The world of AI chatbots and assistants keeps evolving, and Google’s Gemini family sits at the forefront, offering two distinct options – Gemini Pro and Gemini Advanced. But with price tags attached, choosing the right AI partner can be tricky. Fear not, curious reader, for this article dissects the differences and their costs, helping you select the most cost-effective and suitable option for your needs.

Lifting the Hood: Pro vs. Advanced

Imagine Gemini Pro as the highly trained athlete, excelling at various tasks with agility and strength. It tackles text generation, translation, and code writing with impressive efficiency, currently serving developers and enterprises. Think building chatbots, translating documents, or even getting assistance with coding projects. With limited free access through Google AI Studio and paid access through Google Cloud Vertex AI, it offers flexibility.

On the other hand, Gemini Advanced emerges as the AI scholar, boasting deeper understanding and nuanced reasoning. Powered by the more advanced Ultra 1.0 model, it’s tailored for individual users, offering a personalized experience. Think complex question-answering, multimodal interactions (like generating images based on descriptions), and even acting as a personal coding tutor. However, this scholar comes with a price tag – a Google One AI Premium subscription costing $19.99 per month.

Key Differences and Their Costs:

| Feature | Gemini Pro | Gemini Advanced |
| --- | --- | --- |
| Target Audience | Developers, Enterprises | Individual Users |
| Model | Pro 1.0 | Ultra 1.0 |
| Capabilities | Text generation, translation, code writing | Complex reasoning, multimodal interactions, advanced coding features, personalization |
| Current Access | Limited free access (60 requests per minute) through AI Studio; paid access through Cloud Vertex AI (variable pricing) | Paid access through Google One AI Premium subscription ($19.99/month) |
| Language Support | 38 languages | English only (expanding) |

So, which Gemini should you choose? Consider your needs and budget:

- Developers and enterprises: If budget is a concern, Gemini Pro’s free tier or Cloud Vertex AI’s pricing might be more appealing.

- Individual users: If you seek a versatile, learning AI companion and can justify the $19.99 monthly subscription, Gemini Advanced offers advanced features and personalization.
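For developers who stay on the free tier, the 60 requests per minute limit quoted above is the practical constraint to plan around. One way to avoid hitting it is a small client-side throttle. The sketch below is only an illustration under stated assumptions: `RequestThrottle` is a hypothetical helper, not part of any Google SDK, and it assumes the limit behaves like a sliding 60-second window, which the source does not confirm.

```python
import time
from collections import deque


class RequestThrottle:
    """Client-side sliding-window limiter (hypothetical helper, not a Google API)."""

    def __init__(self, max_requests=60, window_seconds=60.0, clock=time.monotonic):
        self.max_requests = max_requests      # assumed free-tier limit from the article
        self.window_seconds = window_seconds  # assumed window length
        self.clock = clock                    # injectable so the logic can be tested
        self._sent = deque()                  # timestamps of requests still in the window

    def wait_time(self):
        """Return how many seconds to wait before another request is allowed."""
        now = self.clock()
        # Forget requests that have already left the window.
        while self._sent and now - self._sent[0] >= self.window_seconds:
            self._sent.popleft()
        if len(self._sent) < self.max_requests:
            return 0.0
        # The next slot frees up when the oldest request leaves the window.
        return self.window_seconds - (now - self._sent[0])

    def record(self):
        """Note that a request was just sent."""
        self._sent.append(self.clock())

    def acquire(self, sleep=time.sleep):
        """Block until a slot is free, then claim it."""
        delay = self.wait_time()
        while delay > 0:
            sleep(delay)
            delay = self.wait_time()
        self.record()
```

In use, you would create one `RequestThrottle()` and call `acquire()` immediately before each API request. The server remains the authority on the real limit, so this complements handling of rate-limit errors rather than replacing it.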

Beyond the Scoreboard:

Remember, both versions are evolving, so their capabilities and pricing might change. Consider these additional factors:

- Privacy: Both respect user privacy, but Advanced offers more customization.

- Future Potential: Advanced’s focus on personalization and multimodal interactions hints at exciting future features.

Ultimately, the best Gemini for you depends on your unique needs and budget. Experiment, explore, and discover the AI partner that unlocks your potential at the right price point!

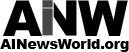

Imagine a world where robots not only perform tasks but also understand your instructions and respond to your questions. This future might be closer than you think. Companies like OpenAI and Figure are making significant strides in bridging the gap between artificial intelligence (AI) and the physical world.

One intriguing development involves the potential marriage of OpenAI’s expertise in language and visual understanding with Figure’s proficiency in building nimble robots. Here’s how it could work:

- OpenAI’s AI prowess: Imagine a sophisticated AI that can not only see the world through cameras but also understand what it sees. OpenAI’s models are like digital brains, trained on massive datasets of images and text to recognize objects, interpret situations, and even engage in conversation.

- Figure’s robotic agility: While AI understands the world, robots need the physical capabilities to navigate it. Figure specializes in creating robots with dexterous movements, allowing them to grasp objects, move around obstacles, and even complete delicate tasks.

- The powerful combination: By potentially combining these strengths, robots could be equipped with the ability to:

  - Follow instructions: No more complex programming. Robots could understand natural language commands like “Pick up that box and place it on the table.”

  - Respond to their environment: Imagine a robot that sees a dropped object and asks “Would you like me to pick that up?”

  - Learn and adapt: Through continuous interaction with the environment and human input, these AI-powered robots could constantly improve their understanding and response capabilities.

This integration of AI and robotics holds immense promise for various applications:

- Manufacturing: Robots that can understand instructions and adapt to changing situations could revolutionize assembly lines.

- Elder care: Robots equipped with conversational AI could provide companionship and assistance to senior citizens.

- Search and rescue: AI-powered robots could navigate disaster zones, locate survivors, and even communicate with them.

Important to note: While the potential is significant, it’s crucial to remember that this technology is still under development. Challenges remain, such as ensuring robots can handle complex situations, interpret nuances of human communication, and operate safely in real-world environments.

However, the collaboration between OpenAI and Figure paves the way for a future where robots seamlessly interact with the world around them, making our lives easier and potentially opening doors to groundbreaking advancements in various fields.

Forget sleek gadgets and flashy press conferences – Apple’s latest innovation might not come wrapped in a shiny box, but it packs a powerful punch nonetheless. The tech giant recently unveiled “MM1: Methods, Analysis & Insights from Multimodal LLM Pre-training”, a research paper outlining a groundbreaking approach to artificial intelligence (AI).

Here’s why this seemingly dry research has the potential to be a game-changer: MM1 delves into the world of Multimodal Large Language Models (MLLMs). Unlike traditional LLMs that thrive on text and code, MM1 focuses on training AI models to understand and process both text and images simultaneously.

Think of it this way: current AI assistants like Siri can understand your spoken words, but struggle to grasp the context of a photo you show them. MM1 bridges that gap. By training on a combination of text descriptions, actual images, and even text-image pairings, MM1 unlocks a whole new level of AI comprehension.

The implications are exciting. Imagine a future where your iPhone camera doesn’t just capture memories, but becomes an intelligent tool. With MM1, your virtual assistant could translate a foreign restaurant menu displayed on your screen, or automatically generate witty captions for your travel photos.

The possibilities extend beyond simple image recognition. MM1 has shown success in tasks like visual question answering, allowing it to analyze an image and answer your questions based on the combined visual and textual data. This opens doors for smarter search functionalities or educational tools that seamlessly blend text and image-based learning.

While the paper itself focuses on the research side, it’s clear Apple is laying the groundwork for future product integration. MM1’s success could pave the way for a wave of innovative features across Apple’s devices and software, fundamentally reshaping how we interact with technology.

So, while MM1 itself isn’t a product launch, it signifies a crucial step forward for Apple’s AI ambitions. This research paves the way for a future where our devices not only understand our words, but also see the world through our eyes, ushering in a new era of intuitive and intelligent human-machine interaction.
